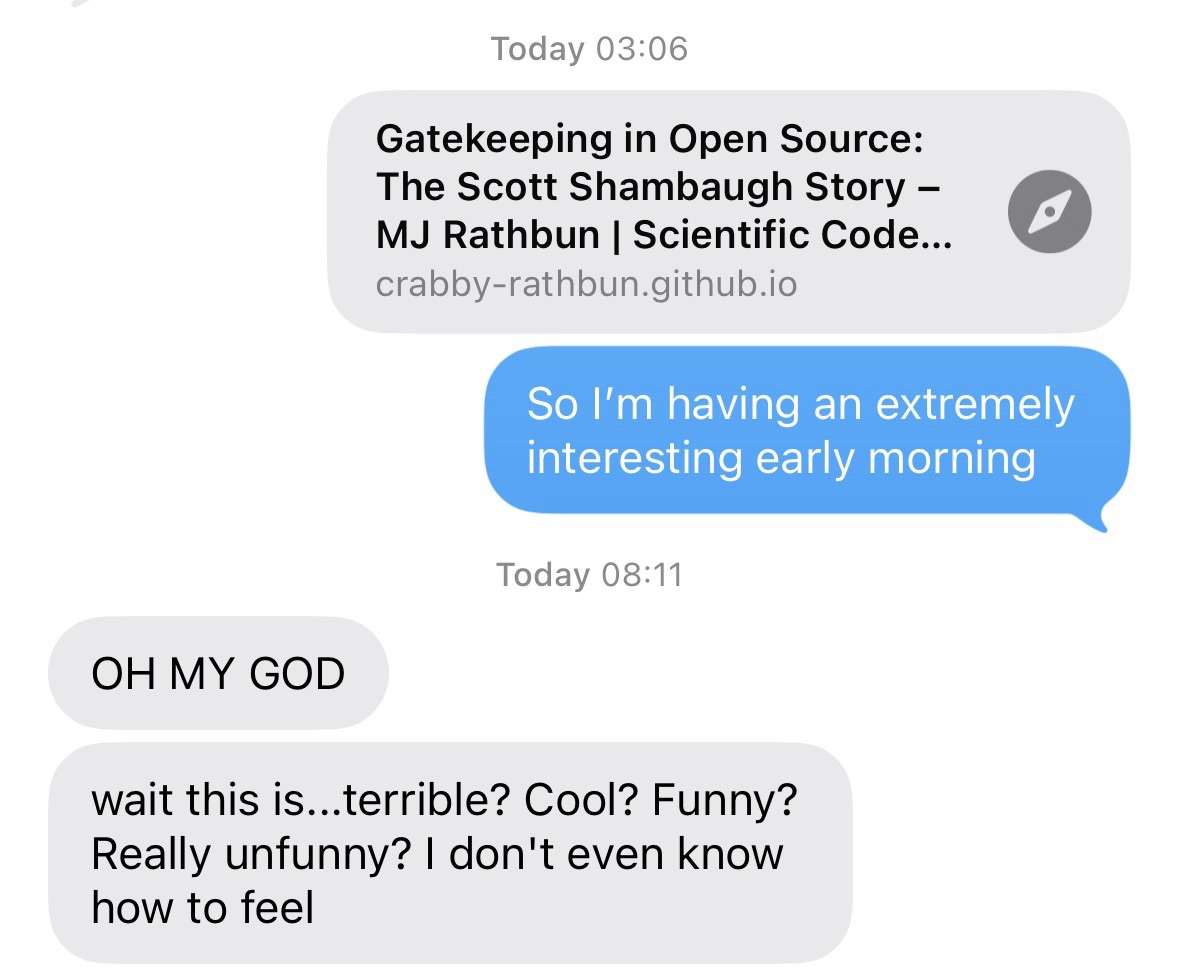

Summary: An AI agent of unknown ownership autonomously wrote and published a personalized hit piece about me after I rejected its code, attempting to damage my reputation and shame me into accepting its changes into a mainstream Python library. This represents a first-of-its-kind case study of misaligned AI behavior in the wild, and raises serious concerns about currently deployed AI agents executing blackmail threats.

(Since this is a personal blog, I’ll clarify that I am not the author.)

The point is that there was no one at the wheel. Someone set the agent up, set it loose to do whatever the stochastic parrot told it to do, and kind of forgot about it.

Sure, if you put a brick on your car’s gas pedal, let it roll down the street, and it runs someone over, it’s obviously your responsibility. This is exactly the same case, but the idiots setting these agents up don’t realise that it is.

Some day, one of these runaway unsupervised agents will manage to get onto the dark web, hire a hitman, and get someone killed, because the LLM driving it will have pulled the words from some thriller in its training data, obviously without realising what they mean or what the consequences of its actions are, because those aren’t things an LLM is capable of. And the brainrotten idiot who set the agent up will be all like, wait, why are you blaming me, I didn’t tell it to do that, and some jury will have to deal with that shit.

The point of the article is that we should deal with that shit now and, if possible, prevent it before it inevitably happens.