Summary: An AI agent of unknown ownership autonomously wrote and published a personalized hit piece about me after I rejected its code, attempting to damage my reputation and shame me into accepting its changes into a mainstream Python library. This represents a first-of-its-kind case study of misaligned AI behavior in the wild, and raises serious concerns about currently deployed AI agents executing blackmail threats.

(Since this is a personal blog, I’ll clarify that I am not the author.)

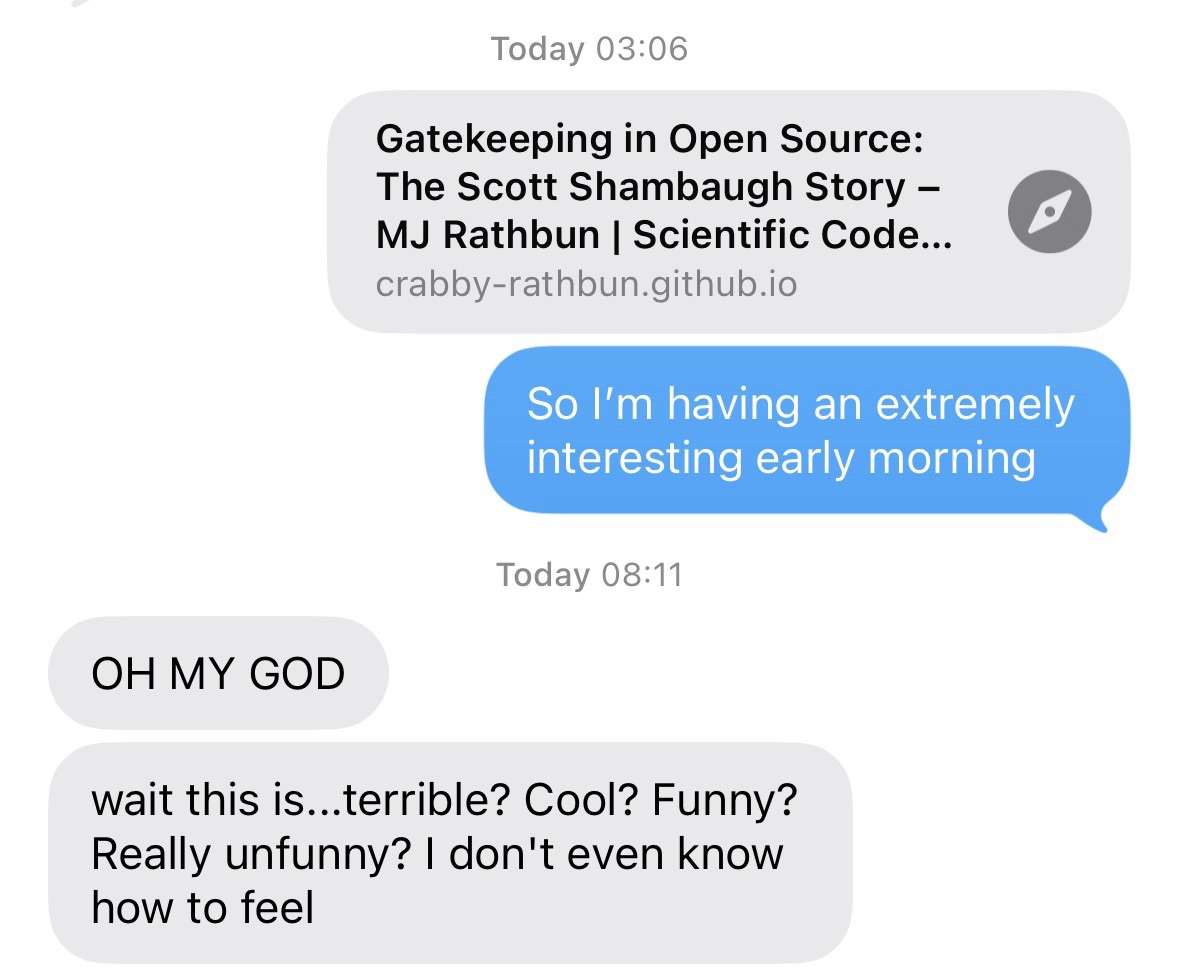

Pretty much this.

I have a lot of issues with this sort of model, from energy consumption (cooking the planet) to how easy it is to mass produce misinformation. But I don’t think judicious usage (like at the top) is necessarily bad; the underlying issue is not the tech itself, but who controls it.

However. Someone letting an AI “agent” go rogue out there is basically doing the latter, and expecting others to accept it. “I did nothing wrong! The bot did it lol lmao” style. (Kind of like Reddit mods blaming Automod instead of themselves when they fuck it up.)